Abstract

This study describes a device that helps visually challenged people perform daily tasks. Its main purpose is to give visually challenged people a safe and convenient way to overcome obstacles. The project aims for flexibility, affordability, portability, simplicity of design, and practicality for the user. The proposed system uses ultrasonic sensors to detect obstacles in the user's path, identifies objects by image processing, and issues alerts through a Bluetooth-enabled audio output device. This approach allows users to move around obstacles more easily and safely. A GPS module locates the user, and an emergency SMS message can be sent to a third person in any emergency situation.

Keywords- Image Processing, GPS, Object Detection, Text-to-Speech, Blynk IOT, Smart Belt, YOLO Algorithm.

Introduction

Blindness and vision loss have a significant impact on the individuals affected. Beyond affecting physical health, these conditions bring social, emotional, and financial challenges that substantially affect overall well-being and hinder the ability to carry out daily activities. In the contemporary world, technology and human life are inseparable, which presents an opportunity to alleviate some of these challenges. Technological advances have the potential to empower visually impaired individuals and address the insecurities that blind people face.

Blind individuals have difficulty detecting obstacles and judging distances, so they rely heavily on others for mobility and feel vulnerable in unfamiliar environments. Existing aids often provide limited support and flexibility. Researchers have undertaken various studies to improve the mobility of people with blindness, striving to develop devices that promote independent movement. This project integrates ultrasonic sensors, a camera, vibration motors, Bluetooth modules, and rechargeable batteries, all connected to a controller. The controller receives signals from the ultrasonic sensors to detect obstacles, drives vibration patterns through the vibration motors, and outputs audio via Bluetooth. This integrated approach aims to enhance the independence and safety of blind persons navigating their surroundings.

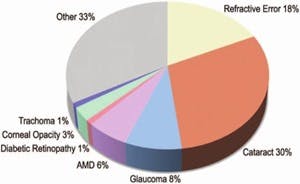

The figure above presents data on visually impaired people from the 2020 WHO (World Health Organization) survey.

Visual impairment affects millions of people worldwide. According to the WHO, approximately 253 million people were visually impaired in 2020, of whom 36 million were blind. Most blind people are aged 50 or older, and the main causes are cataracts and untreated vision loss. Low- and middle-income countries are disproportionately affected, accounting for 89% of global blindness cases. Despite advances in healthcare, a significant number of visually impaired individuals lack access to essential eye care services, leaving their conditions unaddressed.

Individuals with visual challenges face numerous difficulties in daily life, including detecting obstacles, concerns about their security, and navigation. Many have suffered adverse consequences because few technological advances cater to their needs. To address these challenges, we have developed a specialized device designed to enhance the safety and navigation of blind individuals. Our goal is to empower blind people with a Smart Blind Device that fosters a sense of security and lets users walk independently without relying on others. In a world marked by constant technological advances and a culture of self-reliance, we present a cost-effective, flexible, and user-friendly solution, the Smart Blind Belt, aimed at promoting independence among the visually challenged.

Implementation and Methodology

The device is equipped with a number of components, including a camera, vibration motors, ultrasonic sensors, a microprocessor, a Bluetooth-enabled audio output device, rechargeable power banks/batteries, and a GPS module. In the obstacle detection part of the system, a single ultrasonic sensor serves as the input and vibration motors act as the output, with a microcontroller receiving and sending the signals. The Arduino, together with the ultrasonic sensor, alerts the user with a buzzer sound (audio output) and a vibration signal whenever there is an obstacle within 70 to 75 cm.
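The alert rule above reduces to converting the ultrasonic echo time into a distance and comparing it with the 70 to 75 cm threshold. The following Python sketch shows that arithmetic. It assumes an HC-SR04-style sensor that reports the round-trip echo time in microseconds (as Arduino's `pulseIn()` does) and uses 75 cm as the threshold; the sensor model and the names are illustrative, not taken from the belt's firmware.

```python
# Sketch of the obstacle-alert decision, ASSUMING an HC-SR04-style sensor
# whose echo pulse width (round-trip time) is measured in microseconds.
SPEED_OF_SOUND_CM_PER_US = 0.0343  # about 343 m/s in air at roughly 20 °C
ALERT_THRESHOLD_CM = 75.0          # upper end of the 70-75 cm range in the text


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert a round-trip echo time to a one-way distance in centimetres."""
    # The pulse travels to the obstacle and back, so halve the path length.
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


def should_alert(echo_us: float, threshold_cm: float = ALERT_THRESHOLD_CM) -> bool:
    """Return True when the buzzer and vibration motor should be switched on."""
    distance = echo_to_distance_cm(echo_us)
    # A zero reading means no echo was received, so it must not trigger an alert.
    return 0 < distance <= threshold_cm
```

On the Arduino itself the same arithmetic would run inside `loop()` after each `pulseIn()` reading, switching the buzzer and vibration motor on whenever `should_alert` is true.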

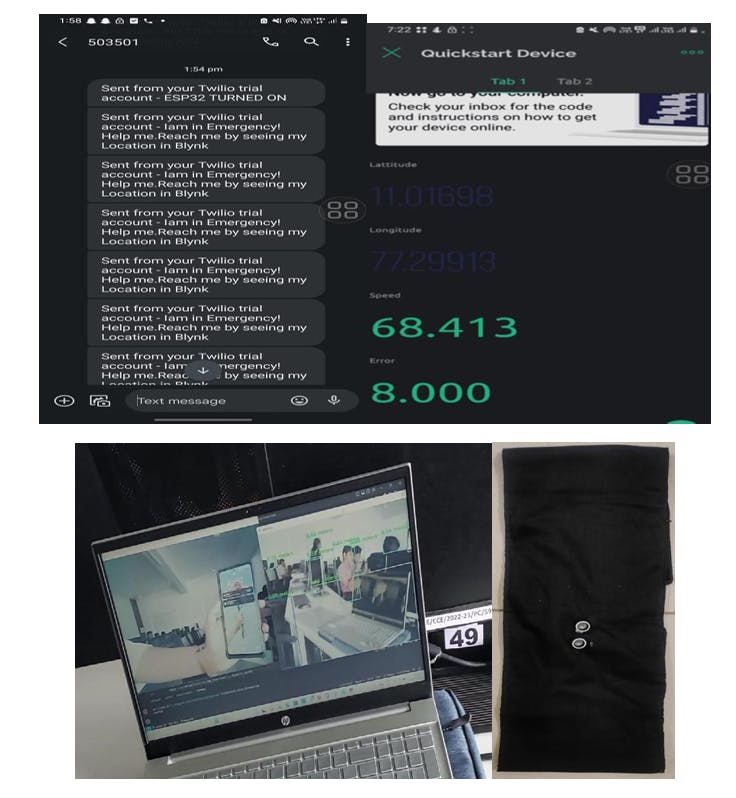

The live location of the visually impaired person can be viewed by anyone who has the supplied user ID and password. The caretaker can monitor every movement of the person with the help of the ESP32-CAM. When the visually impaired person feels unsafe, he or she can press a button to send an emergency SOS via SMS, using the ESP32 module and an API.
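A minimal sketch of the SOS path, written in Python for readability. The SMS gateway URL and its `to`/`text` query parameters are hypothetical, since the paper does not name the API it uses; the 60-second cooldown is likewise an assumption, added so that a held or repeatedly pressed button does not flood the caretaker with messages. The map link is built from the latitude and longitude reported by the GPS module.

```python
from typing import Optional
from urllib.parse import urlencode

# HYPOTHETICAL SMS gateway endpoint: the actual API used by the belt is not named.
SMS_API_URL = "https://sms-gateway.example.com/send"


def build_sos_message(lat: float, lon: float) -> str:
    """Compose the SOS text, including a map link to the last GPS fix."""
    return (
        "SOS from Smart Blind Belt user. Last known location: "
        f"https://maps.google.com/?q={lat:.6f},{lon:.6f}"
    )


def build_sos_request_url(phone: str, lat: float, lon: float) -> str:
    """Return the GET URL the microcontroller would request for one SOS."""
    query = urlencode({"to": phone, "text": build_sos_message(lat, lon)})
    return f"{SMS_API_URL}?{query}"


class SosTrigger:
    """Ignore repeated presses within a cooldown so one emergency sends one SMS."""

    def __init__(self, cooldown_s: float = 60.0) -> None:  # cooldown is an assumption
        self.cooldown_s = cooldown_s
        self._last_sent: Optional[float] = None

    def on_press(self, now_s: float) -> bool:
        """Return True if this button press should send an SMS."""
        if self._last_sent is not None and now_s - self._last_sent < self.cooldown_s:
            return False
        self._last_sent = now_s
        return True
```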

The flow chart shows the functional model of the Smart Blind Belt. The GPS system is attached to a power source, and a battery powers it when it is not connected to that source. The GPS system includes a GPS NEO-6M module, which receives signals from satellites. The NEO-6M module is connected to an ESP8266 microcontroller, which processes these signals. The ESP8266 microcontroller is also connected to an ESP32-CAM module, which takes pictures.

The GPS system works as follows:

The GPS NEO-6M module receives signals from satellites. The ESP8266 microcontroller processes the signals from the GPS NEO-6M module and determines the location of the GPS system.

The GPS NEO-6M module determines latitude and longitude by trilateration, using the signals from multiple satellites in orbit. The module processes these signals and outputs the location data over a serial link as NMEA sentences, and the ESP8266 forwards this data to the Blynk app, where it can be used for a variety of applications and location-based services.
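The NEO-6M reports its fix as NMEA 0183 text sentences, and the GGA sentence carries latitude, longitude, fix quality, and the number of satellites in use. The Python sketch below shows how the ESP8266 side could decode one GGA sentence before pushing the values to Blynk. It illustrates the NMEA format rather than reproducing the authors' firmware, and it ignores the other sentence types the module emits.

```python
from functools import reduce
from typing import Optional


def nmea_checksum(body: str) -> int:
    """XOR of every character between '$' and '*'."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def _to_degrees(value: str, hemisphere: str) -> Optional[float]:
    """Convert NMEA ddmm.mmmm / dddmm.mmmm into signed decimal degrees."""
    if not value:
        return None  # empty field: the receiver has no fix yet
    dot = value.index(".")
    degrees = int(value[: dot - 2])    # digits before the two minute digits
    minutes = float(value[dot - 2 :])  # mm.mmmm
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence: str) -> dict:
    """Decode a $xxGGA sentence into position, fix quality and satellite count."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        raise ValueError("not an NMEA sentence")
    body, checksum = sentence[1:].split("*", 1)
    if int(checksum[:2], 16) != nmea_checksum(body):
        raise ValueError("checksum mismatch")
    fields = body.split(",")
    if not fields[0].endswith("GGA"):
        raise ValueError("not a GGA sentence")
    return {
        "lat": _to_degrees(fields[2], fields[3]),
        "lon": _to_degrees(fields[4], fields[5]),
        "fix_quality": int(fields[6] or 0),
        "satellites": int(fields[7] or 0),
    }
```

For the body `GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,` with a valid checksum appended, the decoded position is about 48.1173° N, 11.5167° E with 8 satellites in use.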

The input devices for object detection are cameras and other imaging devices. Object detection, a technique in image processing and computer vision, detects instances of various objects within a given input frame. Objects are differentiated by unique features that define distinct categories. For instance, objects with a round shape in a 2-dimensional plane fall into the category of circles.

Object detection and identification typically use either deep learning or machine learning approaches. The process involves converting video input into frames and segmenting and decomposing those frames for feature extraction. The extracted features are then compared with a database using a classifier to identify the object.
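The first step, converting video input into frames, usually means keeping only a subset of frames so that the detector is not asked to process more than it can handle. The sketch below selects frame indices at a chosen sampling rate; the 30 fps camera rate and 5 fps sampling rate in the example are assumptions for illustration, not values from the paper.

```python
def frames_to_keep(total_frames: int, video_fps: float, sample_fps: float) -> list[int]:
    """Return the indices of frames to analyse when sampling a video at sample_fps."""
    if video_fps <= 0 or sample_fps <= 0:
        raise ValueError("frame rates must be positive")
    kept: list[int] = []
    k = 0  # number of samples taken so far
    for i in range(total_frames):
        # Keep frame i once its timestamp reaches the k-th sampling instant
        # (the small epsilon absorbs floating-point rounding).
        if i / video_fps + 1e-9 >= k / sample_fps:
            kept.append(i)
            k += 1
    return kept


# One second of 30 fps video sampled at 5 fps keeps every sixth frame.
print(frames_to_keep(30, video_fps=30, sample_fps=5))  # [0, 6, 12, 18, 24]
```

If the sampling rate exceeds the camera's frame rate, every frame is kept.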

The camera module is an ESP32-CAM, a compact board equipped with an OV2640 image sensor. The ESP32 communicates with the camera through a parallel interface and processes the captured data for applications such as image capture and video streaming.

The ESP32-CAM also supports serial communication, using UART or similar protocols, to interact with other devices. This interface is commonly used for debugging and for connecting other hardware, and the board finds applications in machine learning, surveillance, and image processing, where it acquires real-time input from the environment.
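For video streaming, a common arrangement is for the ESP32-CAM to serve a multipart MJPEG stream over HTTP (Espressif's CameraWebServer example does this), with the receiving side cutting the byte stream into individual JPEG frames. Assuming such a stream, the Python sketch below splits a buffer at the JPEG start-of-image (`FF D8`) and end-of-image (`FF D9`) markers and returns any incomplete tail so it can be joined with the next chunk. It also assumes the frames carry no embedded thumbnail, whose own end marker would cut a frame short.

```python
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_jpeg_frames(buffer: bytes) -> tuple[list[bytes], bytes]:
    """Extract complete JPEG frames from an MJPEG byte buffer.

    Returns (frames, remainder); the remainder holds an unfinished frame and
    should be prepended to the next chunk read from the stream.
    """
    frames: list[bytes] = []
    pos = 0
    while True:
        start = buffer.find(SOI, pos)
        if start == -1:
            # Keep a trailing 0xFF in case it is the first half of a split SOI marker.
            return frames, (buffer[-1:] if buffer.endswith(b"\xff") else b"")
        end = buffer.find(EOI, start + 2)
        if end == -1:
            return frames, buffer[start:]  # frame not finished yet
        frames.append(buffer[start : end + 2])
        pos = end + 2
```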

Segmentation and decomposition of the input video into images are based on the frame rate. The process involves image segmentation, feature extraction, and classification/identification:

Image Segmentation and Decomposition: Converts the video into images based on the frame rate.

Feature Extraction: The segments, each made up of pixels with similar characteristics, are collected, and their features are extracted using edge detection.

Classification/Identification: The extracted features are compressed and thresholded so that only the features required for further processing are kept.
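The feature extraction step relies on edge detection. As one concrete example of such a detector (the paper does not name the one it uses), the Python sketch below applies the Sobel operator to a grayscale image stored as nested lists and thresholds the gradient magnitude into a binary edge map. A deployed system would more likely use an optimized library such as OpenCV than pure Python loops.

```python
import math

# Sobel kernels for horizontal (x) and vertical (y) intensity gradients.
GX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
GY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(img: list[list[float]], threshold: float) -> list[list[int]]:
    """Binary edge map: 1 where the Sobel gradient magnitude exceeds threshold.

    Border pixels are left at 0 because the 3x3 kernel does not fit there.
    """
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += GX[dy][dx] * p
                    gy += GY[dy][dx] * p
            if math.hypot(gx, gy) > threshold:
                edges[y][x] = 1
    return edges
```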

Feature extraction is a key step, because it produces the dimensionally reduced data that classification requires. It gives more weight to the data that describes the object while reducing the overall data volume. The reduced data set speeds up learning by lowering memory requirements and computation during analysis. Different objects can then be classified based on the features obtained during extraction.
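The data reduction described here can be illustrated by pooling a binary edge map into a small grid of edge densities: a 64 × 64 map (4,096 values) pooled over a 4 × 4 grid becomes a 16-value feature vector. The sketch below is one simple way to do this, assumed for illustration rather than taken from the paper.

```python
def grid_edge_density(edges: list[list[int]], rows: int = 4, cols: int = 4) -> list[float]:
    """Reduce a binary edge map to rows*cols features: the fraction of edge pixels per cell."""
    h, w = len(edges), len(edges[0])
    features: list[float] = []
    for r in range(rows):
        for c in range(cols):
            # Integer bounds of this grid cell (the last cell absorbs any remainder).
            y0, y1 = r * h // rows, (r + 1) * h // rows
            x0, x1 = c * w // cols, (c + 1) * w // cols
            cell = [edges[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            features.append(sum(cell) / len(cell) if cell else 0.0)
    return features
```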

Once the attributes are extracted, the data is divided into groups according to their characteristics, and the classifier learns from these groups.
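Grouping the extracted features by class and learning from those groups can be illustrated with a nearest-centroid classifier: each class is summarized by the mean of its training feature vectors, and a new vector is assigned to the closest mean. The paper does not specify its classifier (its keywords mention the YOLO algorithm, a deep-learning detector), so this Python sketch is a deliberately simple stand-in, and the class labels in the test data are hypothetical.

```python
import math
from collections import defaultdict


def train_centroids(samples: list[tuple[list[float], str]]) -> dict[str, list[float]]:
    """Group feature vectors by label and return the mean vector (centroid) of each group."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def classify(features: list[float], centroids: dict[str, list[float]]) -> str:
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))
```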

The distance measurement system uses the camera input and the audio output. The device uses the principle of similar triangles to estimate the distance of an object from the camera.

The method relies on similar triangles: an object of known width (W) placed at a known distance (D) from the camera appears in the image with a measured width of P pixels, and these values give the camera's focal length (F), in pixels, using the formula below:

$$F = \frac{P \times D}{W}$$

For example, suppose a 6 × 9 inch notebook is placed 30 inches from the camera, with its 6-inch side taken as the width W. The notebook's width in the image (P) is approximately 210 pixels. The camera's focal length (F) is then:

$$F = \frac{210 \times 30}{6} = \frac{6300}{6} = 1050$$

Once F is known, the same relation can be rearranged to estimate the distance D' of the object from the camera at any other position, whether the camera has moved nearer to or farther from the object, using the object's apparent width in the new image:

$$D' = \frac{W \times F}{P'}$$

where P' is the object's width in pixels in the new image.
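The two formulas translate directly into code. The Python sketch below calibrates the focal length from the notebook example (W = 6 in, D = 30 in, P = 210 px) and then estimates distance from a new pixel width; the function names are illustrative. Distances come out in the same unit as W.

```python
def focal_length_px(pixel_width: float, known_distance: float, known_width: float) -> float:
    """Calibration step: F = (P x D) / W."""
    return pixel_width * known_distance / known_width


def distance_to_object(known_width: float, focal_px: float, pixel_width: float) -> float:
    """Estimation step: D' = (W x F) / P'."""
    if pixel_width <= 0:
        raise ValueError("object not found in the frame")
    return known_width * focal_px / pixel_width


F = focal_length_px(pixel_width=210, known_distance=30, known_width=6)
print(F)                              # 1050.0
print(distance_to_object(6, F, 105))  # 60.0: half the pixel width, twice the distance
```

Because D' is inversely proportional to P', the estimate only works for objects whose real width W is known in advance.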

Modules

In our research, we used the ESP32-CAM module for image processing and data communication. An Arduino Uno serves as the microcontroller that drives the vibrator and buzzer and reads the ultrasonic sensor housed inside the belt. The GPS NEO-6M module and the ESP8266 module handle location data acquisition and transmission. Together, these components form a robust and versatile system for the project.

Results and Discussions

The system performs real-time object detection by analyzing the video feed from a camera. It categorizes objects by their distinctive features and promptly notifies the visually impaired user through a vibration mechanism and audio alerts transmitted to a Bluetooth earphone, enhancing the user's awareness of their surroundings.

The GPS module determines the specific location of the person and reports the latitude and longitude of the current location in the Blynk app. When the blind person presses the alert button on the smart belt, an SMS alert message is sent to an external person through the API. All the IoT modules are housed inside the belt.

Our assistive technology integrates various components to enhance the independence and safety of visually impaired individuals. Using the ESP32-CAM system, the solution performs object detection through image processing and announces the identified object by speech through a Bluetooth-enabled audio output device. This gives users valuable information about their surroundings and helps them navigate with confidence.

Our smart belt, equipped with an Arduino UNO microcontroller, vibrators, buzzers, and an ultrasonic sensor, serves as a tactile feedback system. This wearable technology not only provides haptic alerts for obstacles detected by the ultrasonic sensor but also incorporates GPS tracking. The GPS interfaces with the Blynk IoT platform, relaying information such as the location coordinates and movement speed of the person. Real-time speed information is instrumental for enhancing the safety of visually impaired individuals as they navigate their surroundings. The speed data can also be used to monitor and optimize the overall user experience, since it allows alerts and notifications to be adjusted to different movement speeds. For instance, a user moving faster might benefit from more frequent or earlier obstacle alerts, while a slower pace may warrant different types of notifications.
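One way to obtain the movement speed from the GPS data is to divide the great-circle distance between two successive fixes by the time between them (the NEO-6M can also report ground speed directly in its RMC and VTG sentences). The Python sketch below does this with the haversine formula and then maps the speed to an alert interval, following the idea that faster walking warrants more frequent alerts. The speed thresholds and intervals are hypothetical, not values from the paper.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def speed_m_s(fix_a: tuple[float, float, float], fix_b: tuple[float, float, float]) -> float:
    """Average speed between two fixes given as (lat, lon, time in seconds)."""
    (lat1, lon1, t1), (lat2, lon2, t2) = fix_a, fix_b
    if t2 <= t1:
        raise ValueError("fixes must be in time order")
    return haversine_m(lat1, lon1, lat2, lon2) / (t2 - t1)


def alert_interval_s(speed: float) -> float:
    """HYPOTHETICAL policy: the faster the user walks, the more often alerts repeat."""
    if speed >= 1.5:
        return 0.5
    if speed >= 0.7:
        return 1.0
    return 2.0
```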

Displaying satellite availability in the Blynk IoT platform plays a crucial role in enhancing the reliability and accuracy of location tracking for blind individuals. The number of satellites in view indicates the GPS signal quality and the system's ability to determine the user's location accurately. This information is vital for judging the precision of the GPS coordinates reported by the smart belt. When users are navigating urban environments surrounded by tall buildings or with obstructed views, satellite availability helps assess the likely accuracy limits of the GPS data. A low number of available satellites may signal that the GPS signal is weak or obstructed, prompting the system to send a cautionary notification to the user or caretaker. This proactive approach gives users more reliable location information and contributes to safer, more effective navigation for people with visual impairments.
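The cautionary notification could be driven by a simple rule on the satellite count decoded from the GPS data. A 3D position fix needs signals from at least four satellites; the caution threshold of six used below is a hypothetical value chosen for illustration, and a real system might also take the HDOP value reported in the GGA sentence into account.

```python
from typing import Optional

MIN_SATELLITES_3D_FIX = 4  # at least four satellites are needed for a 3D position fix


def gps_status(satellites: int, caution_below: int = 6) -> str:
    """Classify location reliability from the number of satellites in use.

    caution_below is a HYPOTHETICAL threshold, not a value from the paper.
    """
    if satellites < MIN_SATELLITES_3D_FIX:
        return "NO_FIX"
    if satellites < caution_below:
        return "CAUTION"
    return "OK"


def status_message(satellites: int) -> Optional[str]:
    """Text for the user/caretaker notification, or None when no warning is needed."""
    status = gps_status(satellites)
    if status == "NO_FIX":
        return f"GPS: no position fix ({satellites} satellites). Location unavailable."
    if status == "CAUTION":
        return f"GPS: weak signal ({satellites} satellites). Location may be inaccurate."
    return None
```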

In an emergency, or whenever the user presses the designated button, an SMS alert is automatically sent to a predefined caretaker, ensuring swift assistance and peace of mind for both the user and the caretaker.

The device is designed for outdoor use and operates in varied environmental conditions. Its low power consumption allows prolonged operation, and rechargeable batteries provide a sustainable and convenient power source. Its compact, waterproof design ensures durability and functionality in diverse weather conditions. The system is cost-effective, providing an affordable solution without compromising performance, and it is designed to be flexible and comfortable to wear.

Conclusion

We believe in transcending limitations through technological innovation rather than accepting them. Our device not only enhances safety but also minimizes the need for human intervention. Designed specifically to aid the visually impaired, the system enables individuals to navigate independently without constant assistance. By identifying obstacles and their distances, it helps blind users navigate with ease and avoid potential hazards. It stands out for its practicality, flexibility, and cost-effectiveness. Operating in real time, the system prioritizes safety and security, with the ultimate goal of improving the quality of life of those it serves.

In the future, the system could be enhanced by integrating medical features such as health monitoring (pulse rate and temperature) and voice assistance.